From Good to Unbreakable: How Test Data Shapes Your API Quality

Test data is the unseen foundation of API reliability—master it, and you master quality. Embrace dynamic, AI-powered strategies to transform testing from a chore into your greatest competitive edge.

Let’s talk about the often-overlooked MVP of API development: test data. Think of it as your secret weapon, your rocket fuel, the “God Particle” that gives meaning to every single test run. Without it, your testing is a car idling in the driveway: engine revving, going nowhere.

As your unofficial test-data evangelist, I’m not just here to talk about the why, but also the how—the practical, code-level best practices you can start using today.

Part 1: Core Principle – Data Is Everything

Your code can be clean, your architecture flawless, and your unit tests plentiful. But if you’re feeding your API garbage data, you’re essentially driving blindfolded. An API is a black box. Test data is what you throw in to see what comes out—whether it’s correct output, side effects, or spectacular errors.

- Good data asks: “Does it work under ideal conditions?”

- Bad data asks: “Does it fail gracefully and securely when things go wrong?”

- Weird data asks: “Did anyone even consider the username field receiving 10,000 characters or a bunch of emojis? 🤔”

Key takeaway: The quality and breadth of your test data directly determine the reliability and security of your API. This isn’t an afterthought—it’s engineering 101.
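To make the "weird data" idea concrete, here is a minimal sketch of a few odd values for the username field. The helper names `weirdUsernames` and `measure` are hypothetical, and the empty and whitespace-only entries are extra illustrations beyond the two mentioned above. It also shows why emojis are tricky: JavaScript counts them as two UTF-16 code units each, so a naive length check may disagree with what the user sees.

```javascript
// Hypothetical helper: a few "weird" values to try in the username field.
function weirdUsernames() {
  return [
    "a".repeat(10000), // far longer than any sensible limit
    "🤔🚀🎉",          // emojis, each stored as a surrogate pair
    "",                // empty string
    "   ",             // whitespace only
  ];
}

// String.length counts UTF-16 code units; spreading iterates by code point.
function measure(value) {
  return { codeUnits: value.length, codePoints: [...value].length };
}

for (const name of weirdUsernames()) {
  console.log(measure(name));
}
```

If the API enforces a maximum username length, it is worth checking which of the two counts the server actually uses.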

Part 2: Hands-On – Mastering Test Data with EchoAPI

Enough theory—let’s get our hands dirty. We’ll use EchoAPI, a powerful developer-friendly API collaboration platform, to demonstrate how to efficiently manage and reuse test data.

We’ll test a simple user login endpoint:

POST /api/login

Request Body (JSON):

{

"username": "string",

"password": "string"

}

1. Build a Test Data Strategy

Professional testing isn’t just about the “happy path.” You need data that covers every angle:

| Case Type | Purpose | Example Data (username / password) | Expected Result |
|---|---|---|---|
| Positive | Validate core function | test_user / CorrectPass123! | 200 OK, return token |
| Negative #1 | Handle invalid username | wrong_user / CorrectPass123! | 401 Unauthorized |
| Negative #2 | Handle invalid password | test_user / WrongPassword | 401 Unauthorized |
| Boundary | Test robustness | a…a (150 chars) / any | 400 Bad Request |

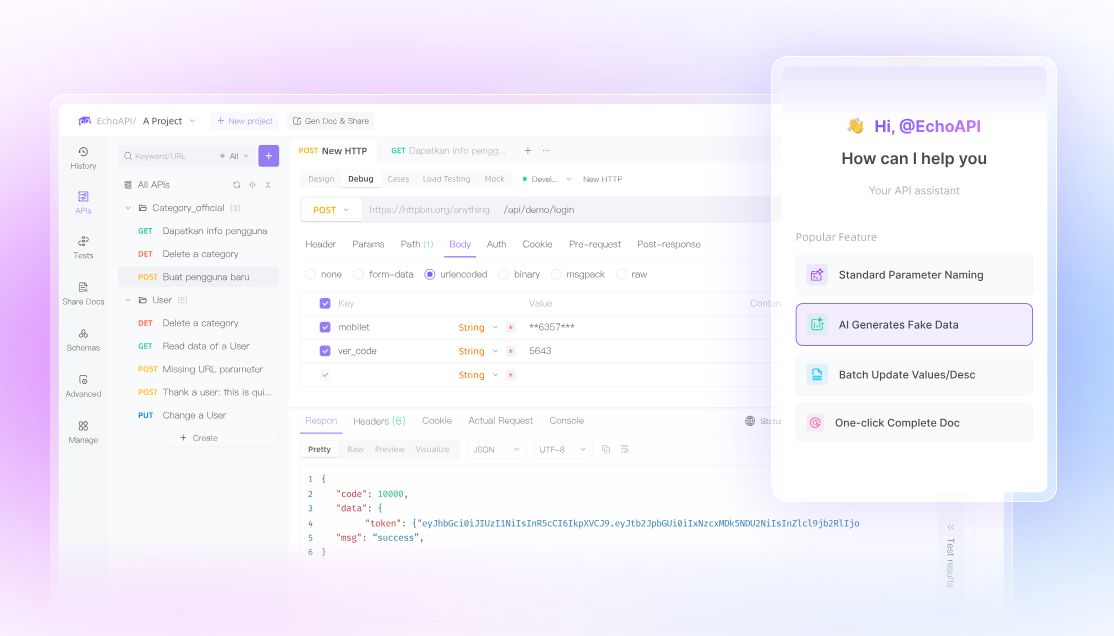

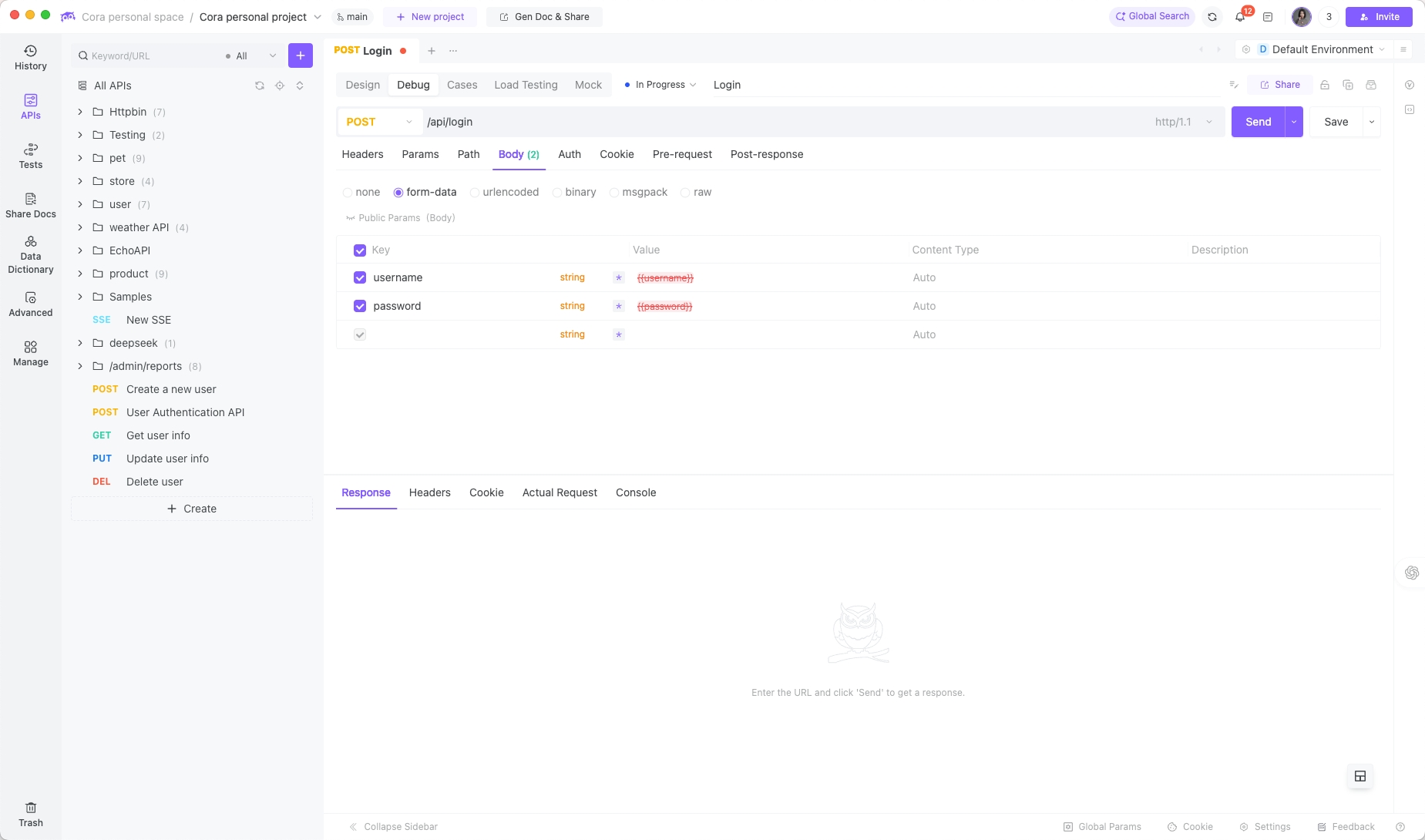
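The strategy table can also live next to your tests as plain data. The sketch below is one possible encoding, not an EchoAPI format: the field names (`type`, `expectedStatus`) and the helper `missingCaseTypes` are assumptions made for illustration. The helper simply reports which case types have no entry yet.

```javascript
// The four cases from the table, expressed as data (field names are hypothetical).
const loginCases = [
  { type: "positive", username: "test_user", password: "CorrectPass123!", expectedStatus: 200 },
  { type: "negative", username: "wrong_user", password: "CorrectPass123!", expectedStatus: 401 },
  { type: "negative", username: "test_user", password: "WrongPassword", expectedStatus: 401 },
  { type: "boundary", username: "a".repeat(150), password: "any", expectedStatus: 400 },
];

// Returns the required case types that have no test case yet.
function missingCaseTypes(cases, requiredTypes = ["positive", "negative", "boundary"]) {
  const present = new Set(cases.map((c) => c.type));
  return requiredTypes.filter((t) => !present.has(t));
}

console.log(missingCaseTypes(loginCases)); // [] when every type is covered
```

A check like this is cheap to run before the suite and catches the case where someone deletes the only boundary test.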

2. Upload and Execute Test Data in EchoAPI

The beauty of EchoAPI is its ability to visualize, manage, and reuse test data instead of hardcoding it.

Step 1: Create the request

In EchoAPI, create a new POST request and set your login URL.

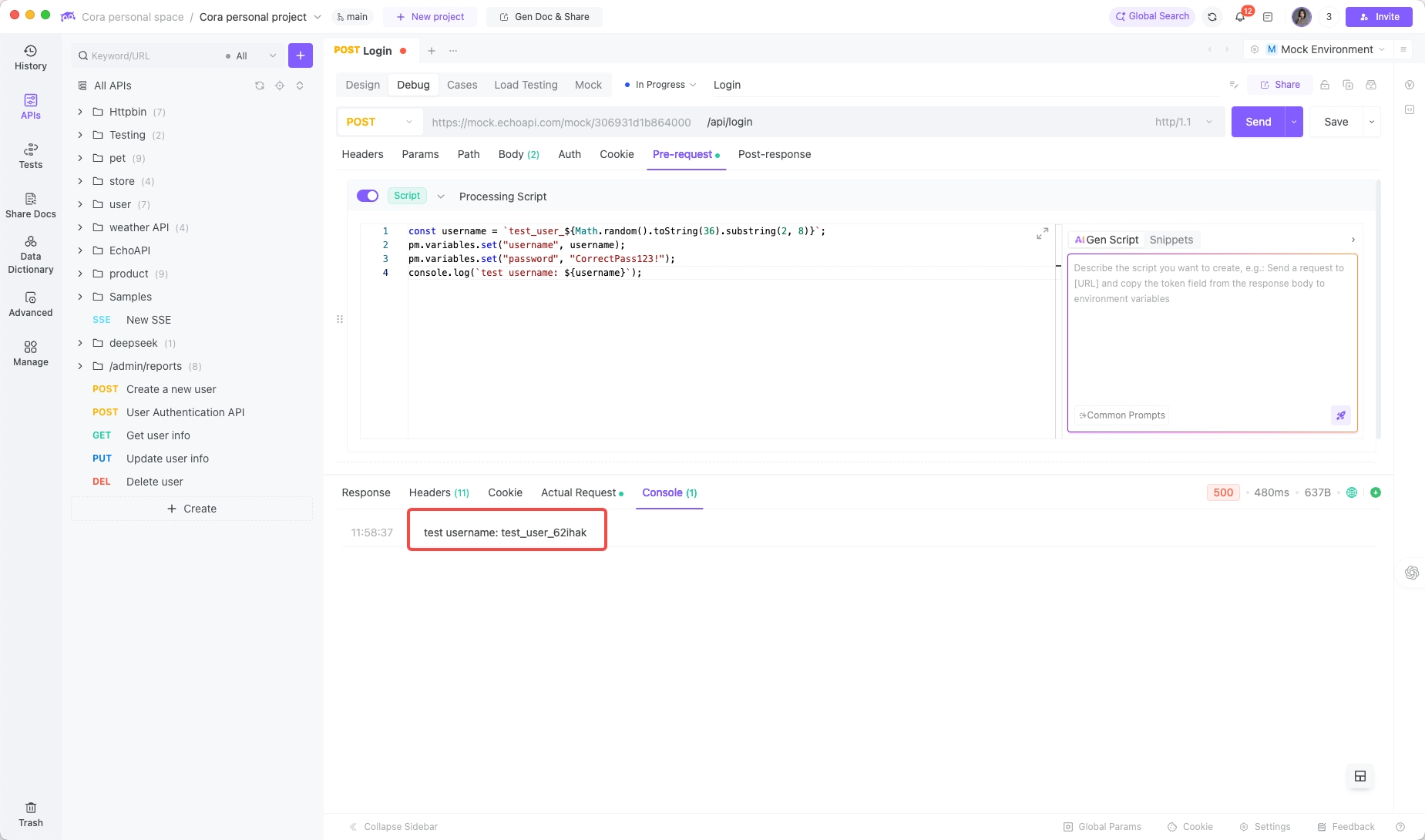

Step 2: Generate dynamic data with scripts

Use a Pre-request Script to generate unique test values.

const username = `test_user_${Math.random().toString(36).substring(2, 8)}`;

pm.variables.set("username", username);

pm.variables.set("password", "CorrectPass123!");

console.log(`test username: ${username}`);

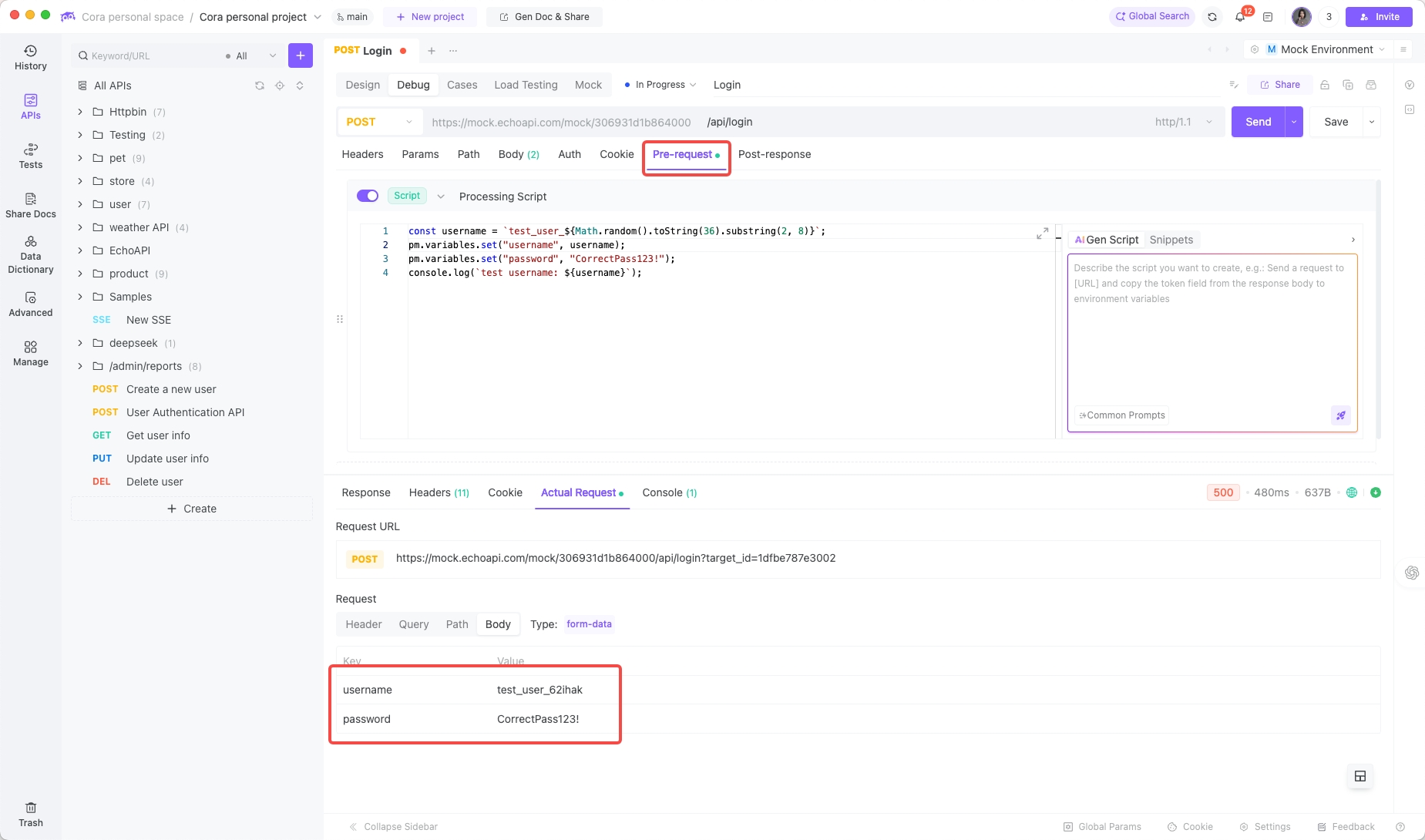
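A six-character random suffix is usually enough, but it can collide in large runs, and `Math.random().toString(36)` occasionally yields fewer characters than expected. The following is a minimal sketch of a slightly sturdier generator, written as plain JavaScript rather than as an EchoAPI script. The function name `uniqueUsername` is hypothetical, and the counter only guarantees uniqueness within a single script run; the timestamp and random part are there to reduce collisions across runs.

```javascript
// Hypothetical helper: timestamp + per-run counter + random suffix.
let counter = 0;

function uniqueUsername(prefix = "test_user") {
  counter += 1;
  const time = Date.now().toString(36);
  // padEnd guards against short random strings.
  const rand = Math.random().toString(36).slice(2, 8).padEnd(6, "0");
  return `${prefix}_${time}_${counter}_${rand}`;
}

console.log(uniqueUsername());
console.log(uniqueUsername("load_test"));
```

In a pre-request script, the result could be stored the same way as above, with `pm.variables.set("username", uniqueUsername())`.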

Step 3: Reference variables in the Body

{

"username": "{{username}}",

"password": "{{password}}"

}

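Now every execution runs with a fresh username, which avoids data conflicts in flows that create accounts, such as a signup followed by a login. For a login-only test, the generated user has to be registered first; otherwise the endpoint will correctly answer 401.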

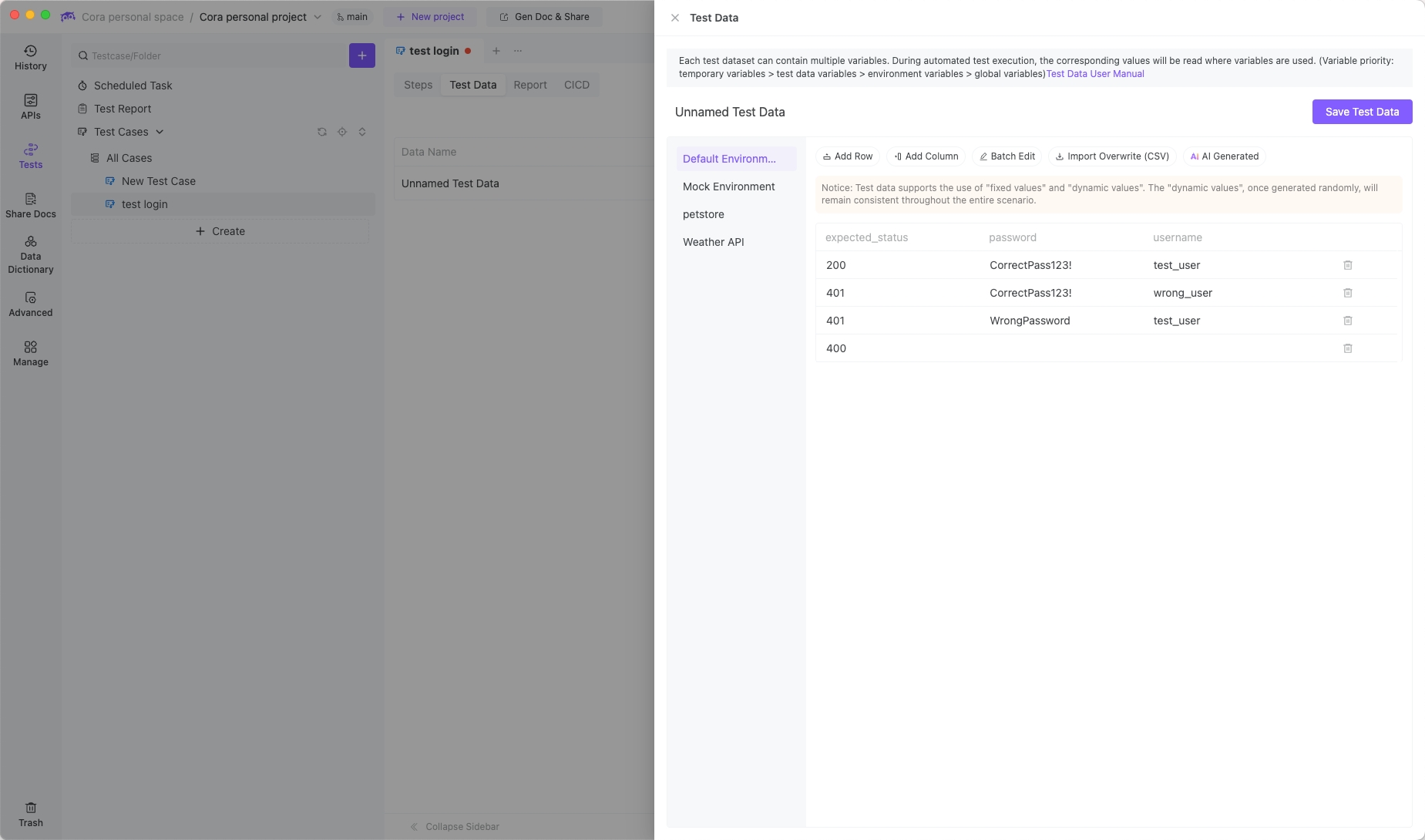
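If the `{{username}}` syntax feels like magic, the idea behind it is plain string substitution. The sketch below illustrates the concept only; it is not EchoAPI's actual implementation, and `resolveTemplate` is a hypothetical name. Unknown placeholders are left untouched so that a missing variable is easy to spot in the request that was sent.

```javascript
// Replaces {{name}} placeholders with values from a variables object.
function resolveTemplate(template, variables) {
  return template.replace(/\{\{\s*([\w.-]+)\s*\}\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(variables, name)
      ? String(variables[name])
      : match // leave unknown placeholders visible
  );
}

const bodyTemplate = '{"username": "{{username}}", "password": "{{password}}"}';
const resolvedBody = resolveTemplate(bodyTemplate, {
  username: "test_user_ab12cd",
  password: "CorrectPass123!",
});

console.log(resolvedBody);
```

Note that plain substitution does not escape values, so a variable containing a double quote would produce invalid JSON in this sketch.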

Step 4: Parameterized bulk testing

This is where EchoAPI shines—you can import CSV or JSON datasets and run parameterized test cases.

- Create 'login_data.csv':

username,password,expected_status

test_user,CorrectPass123!,200

wrong_user,CorrectPass123!,401

test_user,WrongPassword,401

,,400

- Upload the CSV into EchoAPI as a data source.

- Reference variables in the request:

{

"username": "{{username}}",

"password": "{{password}}"

}

- Write assertions in the Tests tab:

// CSV values may arrive as strings, so coerce to a number once before comparing.

const expectedStatus = Number(pm.iterationData.get("expected_status"));

pm.test(`Status Code is ${expectedStatus}`, function () {

  pm.response.to.have.status(expectedStatus);

});

if (expectedStatus === 200) {

  pm.test("Response includes token", function () {

    // Assumes the token is returned at data.token in the JSON body.

    const jsonData = pm.response.json();

    pm.expect(jsonData.data.token).to.be.a('string');

  });

}

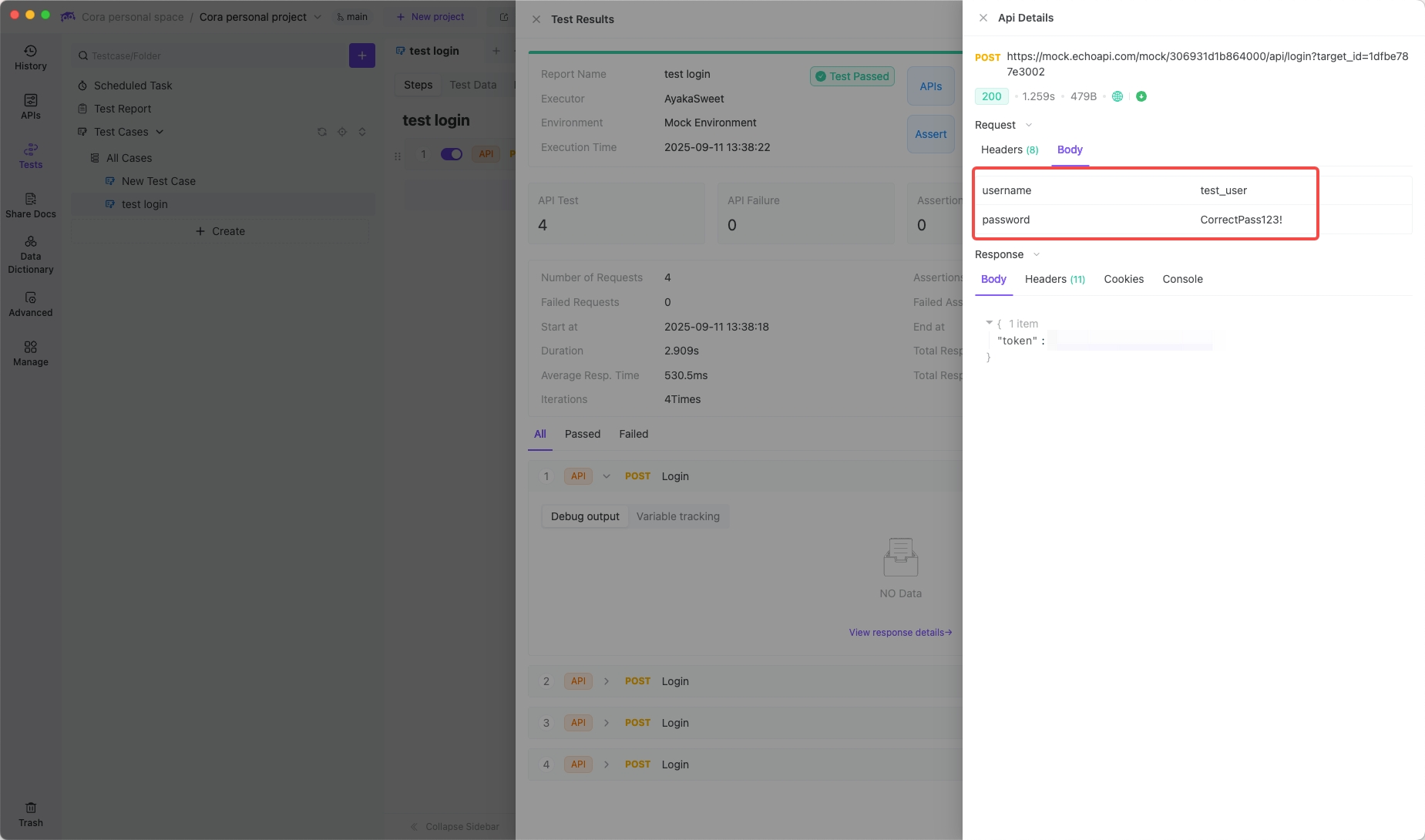

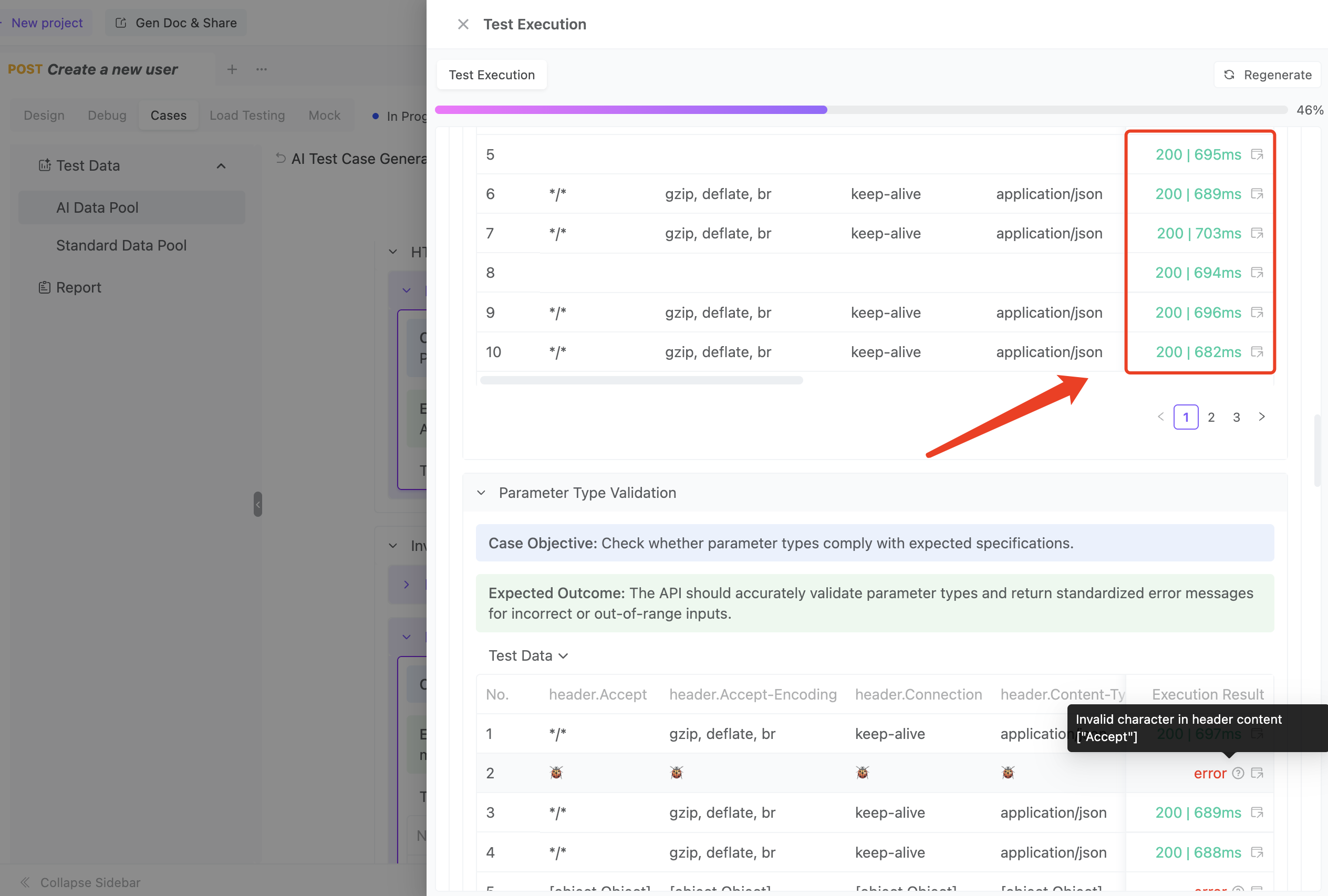

- Run the suite and let EchoAPI generate a clean pass/fail report.
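Conceptually, a parameterized run is a loop over CSV rows: send each row, then compare the actual status with the expected one. The sketch below shows that loop in plain JavaScript, outside EchoAPI. The names `parseCsv`, `runCases`, and `sendWithFetch` are hypothetical, the URL is a placeholder, and the CSV parser is deliberately naive (no quoted fields). The sender is passed in as a function so the loop can be tried without a live server.

```javascript
const loginCsv = `username,password,expected_status
test_user,CorrectPass123!,200
wrong_user,CorrectPass123!,401
test_user,WrongPassword,401
,,400`;

// Naive CSV parser: splits on commas, does not handle quoted fields.
function parseCsv(text) {
  const [headerLine, ...lines] = text.trim().split(/\r?\n/);
  const headers = headerLine.split(",").map((h) => h.trim());
  return lines.map((line) => {
    const cells = line.split(",");
    return Object.fromEntries(headers.map((h, i) => [h, (cells[i] ?? "").trim()]));
  });
}

// Runs every row through `send` (body -> status code) and records pass/fail.
async function runCases(rows, send) {
  const results = [];
  for (const row of rows) {
    const expected = Number(row.expected_status);
    const actual = await send({ username: row.username, password: row.password });
    results.push({ ...row, actual, passed: actual === expected });
  }
  return results;
}

// One possible sender; the URL is a placeholder, not a real endpoint.
async function sendWithFetch(body) {
  const res = await fetch("https://example.test/api/login", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  return res.status;
}
```

Calling `runCases(parseCsv(loginCsv), sendWithFetch)` would exercise a real endpoint; swapping in a stub sender makes the loop itself testable.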

AI-Driven Test Data Generation

Even with well-thought-out cases, humans miss things. That’s where AI steps in.

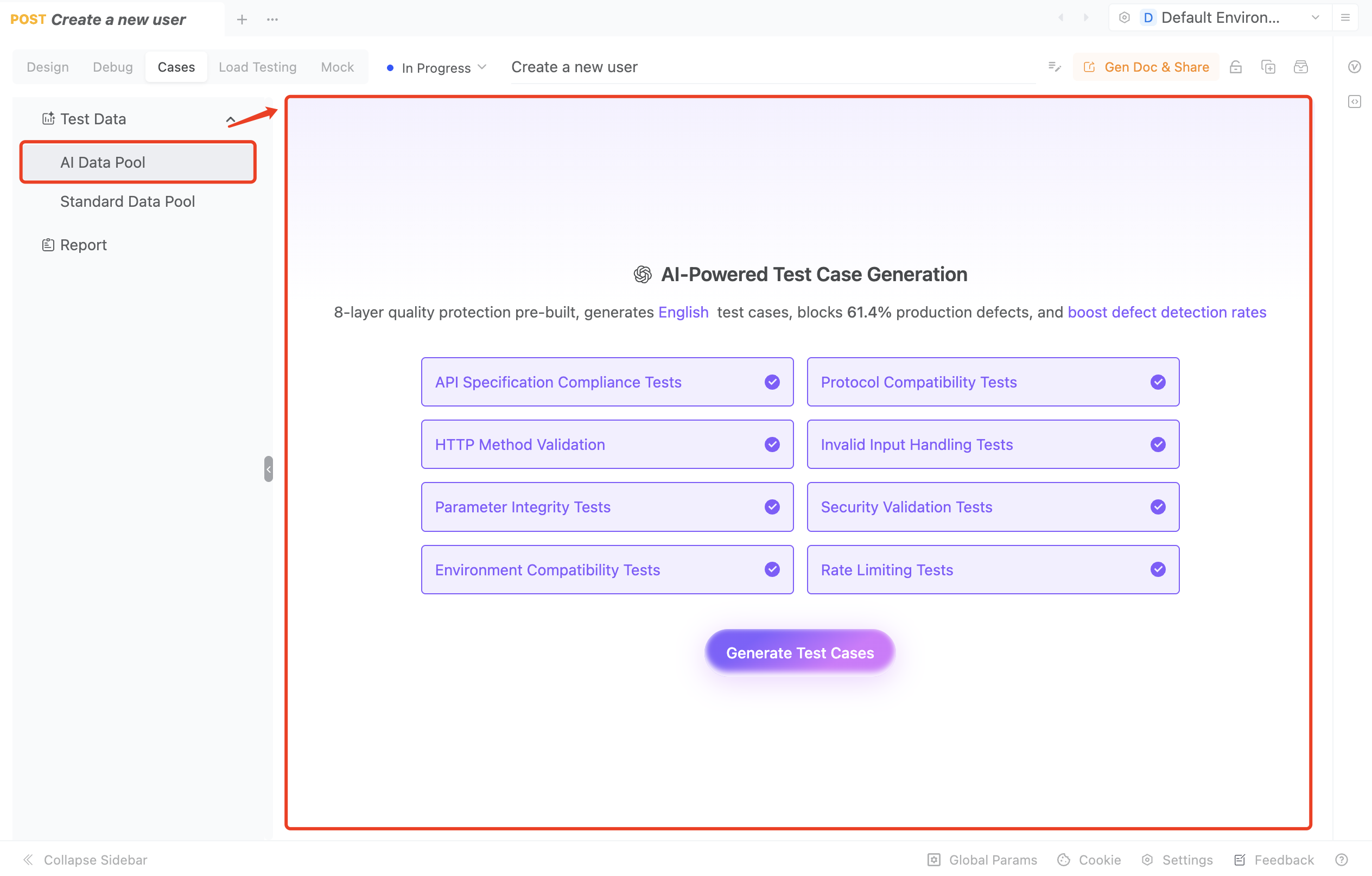

Modern best practice: AI-generated test cases

Imagine this: you provide your API schema, hit “Generate Test Cases with AI”, and seconds later you’ve got a massive suite of scenarios across eight dimensions—functionality, boundaries, edge cases, invalid types, injection attempts, missing fields, encoding issues, and more.

- Comprehensive: AI generates extreme cases developers usually forget—SQL injection strings, malformed data, missing parameters, etc.

- Efficient: What would take hours manually gets done in one click, stored in a centralized AI Data Pool for easy reuse.
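To get a feel for what schema-driven generation produces, here is a small rule-based stand-in. It is not the AI feature and it covers far fewer dimensions; it only derives a handful of negative cases per field from a simple schema. The schema shape, the `maxLength` values, and the function name `generateNegativeCases` are all assumptions made for illustration.

```javascript
// Hypothetical schema format: field name -> { type, maxLength }.
const loginSchema = {
  username: { type: "string", maxLength: 64 },
  password: { type: "string", maxLength: 128 },
};

const validSample = { username: "test_user", password: "CorrectPass123!" };

// Derives negative cases by breaking one field at a time.
function generateNegativeCases(schema, valid) {
  const cases = [];
  for (const [field, rule] of Object.entries(schema)) {
    const withoutField = { ...valid };
    delete withoutField[field];
    cases.push({ label: `${field}: missing`, body: withoutField });
    cases.push({ label: `${field}: wrong type`, body: { ...valid, [field]: 12345 } });
    cases.push({ label: `${field}: null`, body: { ...valid, [field]: null } });
    cases.push({
      label: `${field}: too long`,
      body: { ...valid, [field]: "a".repeat(rule.maxLength + 1) },
    });
    cases.push({ label: `${field}: SQL injection`, body: { ...valid, [field]: "' OR '1'='1" } });
  }
  return cases;
}

const negativeCases = generateNegativeCases(loginSchema, validSample);
console.log(negativeCases.map((c) => c.label));
```

Each generated body changes exactly one field, which keeps failures easy to attribute when a case does not return the expected error.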

Part 3: Best Practices – Manage Data Like a Pro

- Isolation & Uniqueness – Avoid reusing fixed identifiers for data your tests create. Generate unique values per run to avoid collisions.

- Realism – Use libraries like faker.js to simulate realistic names, emails, and phone numbers.

- Centralized Management – Store data in environments, globals, or external files. One change, everywhere updated.

- Automation & CI/CD – Integrate EchoAPI tests into Jenkins, GitHub Actions, or any CI/CD pipeline. Run tests automatically on every commit.
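Outside the tool, centralized management can be as simple as one function that every test reads its settings from. The sketch below is a minimal example under assumed names: the environment variable names (`API_BASE_URL`, `TEST_USERNAME`, `TEST_PASSWORD`), the default values, and `loadTestConfig` are all hypothetical.

```javascript
// Defaults used when no override is provided (values are placeholders).
const defaults = Object.freeze({
  baseUrl: "http://localhost:3000",
  username: "test_user",
  password: "CorrectPass123!",
});

// Builds a read-only config from an environment-like object.
function loadTestConfig(env = {}) {
  return Object.freeze({
    baseUrl: env.API_BASE_URL || defaults.baseUrl,
    username: env.TEST_USERNAME || defaults.username,
    password: env.TEST_PASSWORD || defaults.password,
  });
}

const localConfig = loadTestConfig();
const stagingConfig = loadTestConfig({ API_BASE_URL: "https://staging.example.test" });

console.log(localConfig.baseUrl, stagingConfig.baseUrl);
```

In a CI job, `loadTestConfig(process.env)` would pick up whatever the pipeline injects, so switching environments does not require touching the tests.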

Final Thoughts

Stop leaving API reliability up to luck and a handful of manual checks. Your approach to test data reflects your engineering maturity.

With EchoAPI, parameterized testing, data-driven workflows, and automation are easier than ever. Treat your test data like code—version it, manage it, scale it.

So go ahead: rework your test suites, fuel them with high-quality, diverse data, and forge APIs that can handle anything thrown their way.

Happy testing—and ship with confidence. 🚀
